Unravelling the Mysteries of AI in Video Games

Revealing the magicians' secrets

When talking about Artificial Intelligence (AI) in the context of video games, more often than not what we are actually referring to is cleverly written code. This code is written in such a way that it appears intelligent and sophisticated to the player, but it is actually all just smoke and mirrors. There are some exceptions to this, such as Drivatars in the Forza Motorsport series, but for the most part, developers tend to stick to the tried and tested paradigms that I'll be discussing in this article.

Quick disclaimer: most of my knowledge on this topic stems from an epidemiological background, implementing 'AI' in agent-based models, so whilst the concepts are fundamentally the same, the implementation might differ slightly.

Finite State/Hierarchical State Machines

The year is 1998, it's November, and a little-known company called Valve has just taken the gaming world by storm, completely revolutionising the first-person shooter as we know it with their hit debut game, Half-Life.

Finite State Machines (FSMs) were popularised by 1998's Half-Life, became one of the game's many celebrated features, and went on to be a staple of AI design in the 2000s. The concept itself is quite simple: an agent exists in one state at a time, be that taking cover, searching, reloading, or shooting, to name just a few, and it can transition between these states when certain conditions are satisfied. On top of this, Half-Life also has schedules and goals.

- States

  - Within the C++ code for Half-Life, states are known as tasks

  - States are discrete and so can't overlap each other. This is why a grunt that has been shooting at you stops shooting when it retreats to cover.

- Schedules

  - For the AI in Half-Life it is sometimes necessary to complete a set of tasks to get to the desired point

  - Schedules can be strung together to form goals

- Goals

  - There are only five goals for AI in Half-Life

    - GOAL_ATTACK_ENEMY

    - GOAL_MOVE

    - GOAL_TAKE_COVER

    - GOAL_MOVE_TARGET

    - GOAL_EAT

  - Schedules are strung together in such a way that it allows for the desired goal to be achieved

When following a particular schedule, the agent will not deviate from that given path. That said, if certain conditions are satisfied (usually due to player intervention) and either the schedule becomes invalid or the goal changes, the NPC will abandon that schedule.

Knowing this, how would we go about creating a very basic implementation of a finite state machine? To start, we need to create a base class for all of our states to inherit from.
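
To make the idea of "follow the schedule unless a condition invalidates it" a bit more concrete, here is a minimal sketch. Every name in it (Task, Condition, Schedule, ScheduleRunner, interrupt_mask) is made up for illustration and is not taken from Half-Life's source; it only assumes that a schedule is an ordered list of tasks plus a set of conditions that are allowed to interrupt it.

```cpp
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// All names here are hypothetical; this is not Half-Life's actual code.
enum class Task { FindCover, RunToCover, Reload };

// Conditions are bit flags so that several can be raised in the same frame.
enum Condition : std::uint32_t {
    COND_NONE         = 0,
    COND_HEAVY_DAMAGE = 1u << 0,
    COND_ENEMY_DEAD   = 1u << 1,
    COND_HEAR_SOUND   = 1u << 2
};

struct Schedule {
    std::vector<Task> tasks;       // executed strictly in this order
    std::uint32_t interrupt_mask;  // conditions that make the schedule invalid
};

class ScheduleRunner {
    Schedule m_schedule;
    std::size_t m_next = 0;        // index of the next task to run
    bool m_interrupted = false;

public:
    explicit ScheduleRunner(Schedule schedule) : m_schedule(std::move(schedule)) {}

    // Advances by one task. Returns false when nothing was run, either because
    // the schedule is finished or because an interrupt condition was raised.
    bool Step(std::uint32_t active_conditions) {
        if (m_interrupted || (active_conditions & m_schedule.interrupt_mask) != 0) {
            m_interrupted = true;
            return false;
        }
        if (m_next >= m_schedule.tasks.size()) {
            return false;
        }
        ++m_next;  // a real agent would execute tasks[m_next] before advancing
        return true;
    }

    bool Interrupted() const { return m_interrupted; }
    bool Finished() const { return !m_interrupted && m_next == m_schedule.tasks.size(); }
    std::size_t TasksDone() const { return m_next; }
};
```

Conditions that are not in the mask are simply ignored, which is what keeps the agent on its path; anything in the mask ends the schedule so that a new one can be picked.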

Header File

#pragma once

enum StateOfState

{

NONE = 0,

ACTIVE,

COMPLETE,

TERMINATED

};

class AbstractState

{

private:

StateOfState m_state;

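public:

AbstractState(); // declared here because the CPP file defines it

virtual ~AbstractState() = default; // derived states are deleted through base pointers

virtual void Awake();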

virtual bool EnterState(); //Allows the states derived from this base state to have their own version of this function

virtual bool ExitState();

virtual void UpdateState() = 0; // forces the states derived from this base state to have their own version of this function

const StateOfState& get() const { return m_state; }

void set(const StateOfState& state) { m_state = state; }

};

AbstractState Header File

CPP File

#include "AbstractState.h"

AbstractState::AbstractState()

{

m_state = StateOfState::NONE;

}

void AbstractState::Awake()

{

m_state = StateOfState::NONE;

}

bool AbstractState::EnterState()

{

m_state = StateOfState::ACTIVE;

return true;

}

bool AbstractState::ExitState()

{

m_state = StateOfState::COMPLETE;

return true;

}

AbstractState C++ file

Because of polymorphism in C++ we can use this base class as a base state from which we can create the states that will actually interface with the state machine, like an idle state.
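
As a rough illustration of what such a derived state could look like, here is a sketch of an idle state. The IdleState class and its tick counter are my own invention for the example, and a trimmed copy of the base class is repeated so that the snippet compiles on its own.

```cpp
// Trimmed copy of the base class from above so this snippet stands alone.
enum StateOfState { NONE = 0, ACTIVE, COMPLETE, TERMINATED };

class AbstractState {
    StateOfState m_state = NONE;

public:
    virtual ~AbstractState() = default;
    virtual bool EnterState() { m_state = ACTIVE; return true; }
    virtual bool ExitState() { m_state = COMPLETE; return true; }
    virtual void UpdateState() = 0;  // every concrete state must implement this
    StateOfState get() const { return m_state; }
};

// Hypothetical concrete state: the agent stands still and counts updates.
class IdleState : public AbstractState {
    int m_ticks_idle = 0;

public:
    bool EnterState() override {
        m_ticks_idle = 0;                    // reset our own data first
        return AbstractState::EnterState();  // then let the base mark us ACTIVE
    }

    void UpdateState() override { ++m_ticks_idle; }

    int TicksIdle() const { return m_ticks_idle; }
};
```

Because the state machine only ever talks to AbstractState, it can call UpdateState() without knowing that the object behind the pointer is an IdleState.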

To control the transition of states we have to build the state machine.

Header File

#pragma once

#include <memory>

#include "AbstractState.h"

class StateMachine

{

std::unique_ptr<AbstractState> m_start_state; // A smart pointer that owns a particular state object

std::unique_ptr<AbstractState> m_previous_state;

std::unique_ptr<AbstractState> m_current_state;

public:

void Awake();

void Start();

void Update();

#pragma region STATE MANAGEMENT

void EnterState(std::unique_ptr<AbstractState>& NextState);

#pragma endregion

};

Finite State Machine Header File

C++ file

#include "StateMachine.h"

void StateMachine::Awake()

{

m_current_state = nullptr;

}

void StateMachine::Start()

{

if (m_start_state != nullptr)

{

EnterState(m_start_state);

}

}

void StateMachine::Update()

{

if (m_current_state != nullptr)

{

m_current_state->UpdateState();

}

}

void StateMachine::EnterState(std::unique_ptr<AbstractState>& NextState)

{

if (NextState == nullptr)

{

return;

}

if (m_current_state != nullptr)

{

m_current_state->ExitState();

}

m_current_state = std::move(NextState);

m_current_state->EnterState();

}

Finite State machine C++ file

As you can see even for a basic state machine with only an abstract class, there is already a lot of code and it gets complicated quite quickly. This is why finite state machines aren't so widely used now. With the large dependency on code, finite state machines become very difficult to scale, due to the extra amount of debugging that comes with it and the increase in conditions required for state transitions. There are ways around this, notably in the form of Hierarchical State Machines (HSMs). You can almost think of HSMs as an FSM split into smaller FSMs; but the problem still persists.
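
To show what "an FSM split into smaller FSMs" can look like, here is a deliberately tiny sketch. The modes, sub-states and senses are all hypothetical, and a real HSM would have far more of each; the point is only that the top level decides which sub-machine is active, and each sub-machine only worries about its own transitions.

```cpp
// All names are hypothetical, chosen only for this illustration.
enum class Mode { Patrol, Combat };                   // top-level machine
enum class PatrolState { Walk, Wait };                // sub-machine for patrolling
enum class CombatState { Shoot, Reload, TakeCover };  // sub-machine for combat

struct Agent {
    Mode mode;
    PatrolState patrol;
    CombatState combat;
};

struct Senses {
    bool enemy_visible;
    bool ammo_empty;
    bool under_fire;
};

void Update(Agent& agent, const Senses& senses) {
    // Top level: only decides which sub-machine is in charge.
    if (agent.mode == Mode::Patrol && senses.enemy_visible) {
        agent.mode = Mode::Combat;
        agent.combat = CombatState::Shoot;
    } else if (agent.mode == Mode::Combat && !senses.enemy_visible) {
        agent.mode = Mode::Patrol;
        agent.patrol = PatrolState::Walk;
    }

    // Lower level: the combat sub-machine handles its own transitions.
    if (agent.mode == Mode::Combat) {
        if (senses.ammo_empty) {
            agent.combat = CombatState::Reload;
        } else if (senses.under_fire) {
            agent.combat = CombatState::TakeCover;
        } else {
            agent.combat = CombatState::Shoot;
        }
    }
}
```

Even here you can see the scaling problem: every new sub-state means more conditions to check, they are just grouped more tidily.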

So what do most games use now, if not state machines? Behaviour trees.

Behaviour trees

Using behaviour trees as a way to control NPCs was first brought to light in Bungie's 2004 Xbox exclusive Halo 2 and since then they've proliferated within video games.

A behaviour tree, to put it simply, is just a list of instructions with some logic that determines which list of instructions to follow and in what order. We call it a tree because it follows the rules of the tree data structure and so starts at a single node. The tree is made up of nodes and branches, nodes being where we put logic and branches defining what list of nodes we can look at.

There are 5 different types of nodes that exist within behaviour trees:

- Root

  - Only has child nodes, no parent node. In layman's terms, it has no nodes above it, only below

  - At the top of the tree

- Leaf

  - At the bottom of the tree

  - Has no children, only a parent node

  - Is where the 'AI' behaviours are put (e.g. go to this location, take fire or take cover)

- Selector

  - Has both a parent node and child nodes

  - Is used to determine what branch to follow based on inputs

- Sequence

  - Has both a parent node and child nodes

  - Is used to operate multiple branches in a given order

- Decorator

  - Has a single child node

  - Is used to modify the logic of that child node

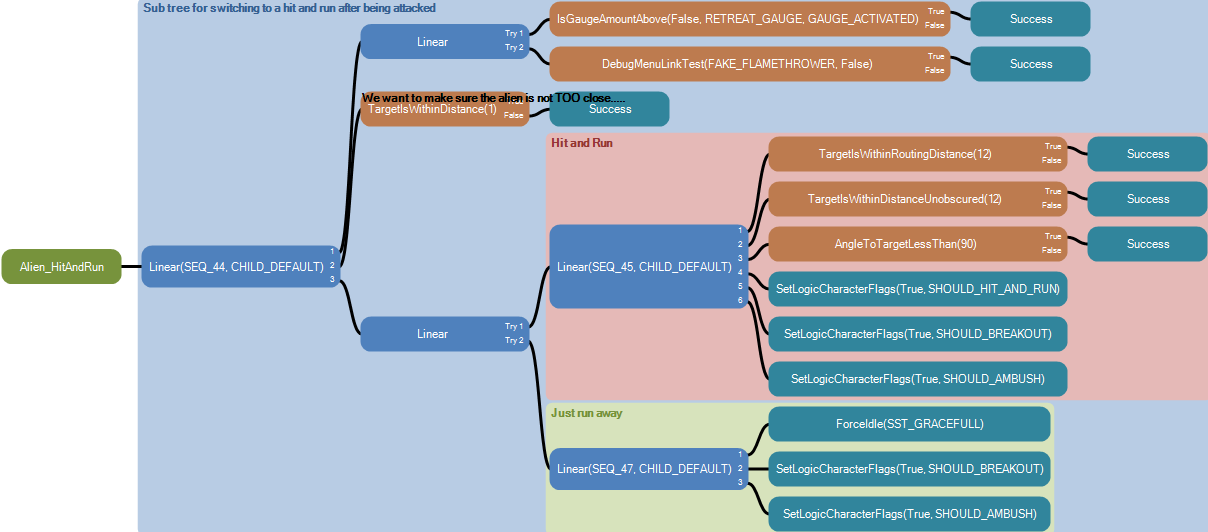

Being able to view an NPC's behaviour tree is usually quite difficult, given their nature as code blocks that have been joined up graphically. However, thanks to Matt Filer and his OpenCAGE modding toolkit for Alien: Isolation, anyone with a PC installation can modify the game files and thus the behaviour trees.

If we look at this behaviour tree for the hit-and-run behaviour of the Xenomorph, we can see four of the node types: Root (green), Leaf (turquoise), Selector (orange/brown), and Sequence (blue). In this particular tree, we have two types of sequencer nodes. The first type is the standard sequence node: it goes through every branch in order, and if one branch fails the entire node fails and the logic backtracks to the nearest point from which it can carry on. For example, if one of the Selectors within the Hit and Run section returned 'False', the logic would go back to the second type of sequence node. The second type can almost be seen as an inverse of the first: if a branch fails, it simply moves on to the next branch.

If you compare this to the FSM we looked at previously, it's quite clear why developers lean towards behaviour trees. They are easier to read because they can be displayed graphically (though id Tech, the engine used in the Doom games, does this with FSMs and HSMs too), and the logic is laid out in a way that makes sense: it's easy to follow the path through the tree and, given the parameters, it would be possible to predict the outcome just by looking at it.
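
For a feel of how these node types fit together in code, here is a minimal sketch in the common textbook style. It is not how Alien: Isolation implements its trees; the class names are my own, the Inverter is just one example of a decorator, and a production tree would normally also have a 'running' status for behaviours that take several frames.

```cpp
#include <functional>
#include <memory>
#include <utility>
#include <vector>

enum class Status { Success, Failure };

struct Node {
    virtual ~Node() = default;
    virtual Status Tick() = 0;
};

// Leaf: wraps the actual behaviour, here any callable returning a Status.
class Leaf : public Node {
    std::function<Status()> m_action;

public:
    explicit Leaf(std::function<Status()> action) : m_action(std::move(action)) {}
    Status Tick() override { return m_action(); }
};

// Shared storage for nodes that have several children.
class Composite : public Node {
protected:
    std::vector<std::unique_ptr<Node>> m_children;

public:
    void Add(std::unique_ptr<Node> child) { m_children.push_back(std::move(child)); }
};

// Sequence: runs children in order and fails as soon as one of them fails.
class Sequence : public Composite {
public:
    Status Tick() override {
        for (auto& child : m_children) {
            if (child->Tick() == Status::Failure) return Status::Failure;
        }
        return Status::Success;
    }
};

// Selector: tries children in order and succeeds as soon as one succeeds.
class Selector : public Composite {
public:
    Status Tick() override {
        for (auto& child : m_children) {
            if (child->Tick() == Status::Success) return Status::Success;
        }
        return Status::Failure;
    }
};

// Decorator example: flips the result of its single child.
class Inverter : public Node {
    std::unique_ptr<Node> m_child;

public:
    explicit Inverter(std::unique_ptr<Node> child) : m_child(std::move(child)) {}
    Status Tick() override {
        return m_child->Tick() == Status::Success ? Status::Failure : Status::Success;
    }
};
```

The Sequence here matches the first type of sequencer described above, and the Selector behaves like the second: a failed branch just means the next branch gets tried.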

The next two concepts aren't really new ways of thinking about how to do 'AI' in games. They are more like extensions of those concepts that can either build and improve on those original concepts or just add to them.

Director-based AI

When talking about director-based 'AI', one game series should instantly come to mind: Valve and Turtle Rock Studios' Left 4 Dead (L4D). This was the first, though not the only, instance of a director 'AI', with other notable examples found in Alien: Isolation and DOOM (2016). In all three games, the role of the director is to manage the pacing of the game.

In L4D the director does this by spawning the different enemy types at different points during the level, but also by placing varying amounts of health kits and ammo/weapons at certain pre-defined points in the level, helping to keep each run different for the player. Like most 'AI' directors, the one in L4D manages the intensity of the game, making sure that it's not going full throttle all the time.

Similarly, the director in Alien: Isolation manages the intensity through what is known as the menace meter. If the Xenomorph gets up in your face too much, the director will tell it to back off and the Xenomorph will retreat to the vents. The director will also occasionally point the Xenomorph in your general direction, to avoid levels where you could completely miss it.

So how do we go about implementing 'AI' directors into video games? Well, there's no single way of doing it. As you've seen here, there are two very different implementations that interact with two very different NPC systems: an HSM in L4D and a behaviour tree in Alien: Isolation. It's a very custom solution that depends heavily on the system it's being put into. As a simplification, though: if a system tracks certain meters (like the menace meter in Alien: Isolation), and those meters trigger actions that affect the pacing of the game once certain thresholds have been passed, that system could be considered a director 'AI'.
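
As a sketch of that simplification, here is a toy director built around a single meter. The thresholds, rates and names are all invented for the example and have nothing to do with the real values in Alien: Isolation; the only idea carried over is that a meter fills while the threat is close and the director changes its orders once thresholds are crossed.

```cpp
#include <algorithm>

// Hypothetical tuning values, chosen only to make the example readable.
constexpr float kBackOffAbove = 100.0f;  // meter level that triggers a retreat
constexpr float kHuntBelow    = 30.0f;   // meter level that resumes the hunt
constexpr float kMeterMax     = 120.0f;

enum class DirectorOrder { Hunt, BackOff };

class Director {
    float m_menace = 0.0f;
    DirectorOrder m_order = DirectorOrder::Hunt;

public:
    // Call once per update with whether the threat is currently near the player.
    DirectorOrder Update(bool threat_near_player, float delta_seconds) {
        // The meter fills while the threat is close and drains while it is away.
        m_menace += (threat_near_player ? 20.0f : -10.0f) * delta_seconds;
        m_menace = std::max(0.0f, std::min(m_menace, kMeterMax));

        // Two thresholds give some breathing room before the hunt resumes.
        if (m_menace >= kBackOffAbove) {
            m_order = DirectorOrder::BackOff;
        } else if (m_menace <= kHuntBelow) {
            m_order = DirectorOrder::Hunt;
        }
        return m_order;
    }

    float Menace() const { return m_menace; }
};
```

Using two thresholds rather than one is a design choice on my part: with a single threshold the order would flip back and forth every time the meter hovered around it.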

Goal-oriented action planning

Unlike director 'AI' which adds to the existing concepts, Goal-Oriented Action Planning (GOAP) takes the concept of state machines, tears it apart, removes pieces, and puts it back together, but better.

If we look back at the FSM system that we had for Half-Life, there are goals, schedules, and states. With goal-oriented action planning we can remove that idea of schedules, or at least pre-defined schedules anyway. The idea here is that rather than using pre-defined schedules we use an 'AI' planner to create one.

So how does it build the plan?

- Finds a valid goal

- Finds an action that satisfies that goal

- Finds an action that satisfies the preconditions of that previous action

- Repeats until current world-state is matched

- On failure repeat for a new goal

But it doesn't just pull out a random action. Instead, A* (a pathfinding algorithm) is used. Other algorithms that find the shortest path between two points do exist, such as Dijkstra's algorithm and greedy best-first search, but the heuristic aspect of A* makes it the pathfinding algorithm of choice. So what actually happens in the planner is that it finds a path from the goal's desired world state to the current world state.

With A* being A*, each node (action) has two costs associated with it:

- G cost (The weight of a connection between two nodes, e.g. if the time to get between two objects is 10 minutes the G cost would be 10)

- H cost (The heuristic; again using the idea of moving between two objects, the H cost would be the distance to that object as the crow flies from your node's current location)

Within GOAP

- G cost - A fixed value per action but one that can be changed if necessary

- H cost - The number of world states that still need to be satisfied
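
Putting the steps and the two costs together, a planner can be sketched roughly as follows. This is not Jeff Orkin's implementation: it assumes world state is a set of boolean facts packed into a bitmask, that actions only ever make facts true, and the facts and action names in the usage note are hypothetical. The search starts at the goal and works backwards until everything it still requires is already true in the current world state.

```cpp
#include <algorithm>
#include <bitset>
#include <cstdint>
#include <queue>
#include <set>
#include <string>
#include <vector>

using Facts = std::uint32_t;  // each bit is one boolean world-state fact

// Hypothetical example facts.
constexpr Facts kHasWeapon    = 1u << 0;
constexpr Facts kWeaponLoaded = 1u << 1;
constexpr Facts kEnemyDead    = 1u << 2;

struct Action {
    std::string name;
    Facts preconditions;  // facts that must hold before the action can run
    Facts effects;        // facts that hold after the action has run
    int cost;             // the G cost of taking this action
};

struct PlanNode {
    Facts required;                  // facts the plan so far still relies on
    int g;                           // summed action cost so far
    int f;                           // g + h
    std::vector<std::string> steps;  // chosen actions, last-to-run first
};

// H cost: how many required facts the current world state does not provide.
int Unsatisfied(Facts required, Facts current) {
    return static_cast<int>(std::bitset<32>(required & ~current).count());
}

// Returns action names in execution order. The result is empty if no plan
// exists or if the goal already holds.
std::vector<std::string> Plan(Facts current, Facts goal,
                              const std::vector<Action>& actions) {
    auto worse = [](const PlanNode& a, const PlanNode& b) { return a.f > b.f; };
    std::priority_queue<PlanNode, std::vector<PlanNode>, decltype(worse)> open(worse);
    std::set<Facts> closed;  // requirement sets that were already expanded

    PlanNode start{goal, 0, Unsatisfied(goal, current), {}};
    open.push(start);

    while (!open.empty()) {
        PlanNode node = open.top();
        open.pop();
        const Facts unmet = node.required & ~current;
        if (unmet == 0) {  // the current world state satisfies everything
            std::reverse(node.steps.begin(), node.steps.end());
            return node.steps;
        }
        if (!closed.insert(node.required).second) continue;  // seen before

        for (const Action& action : actions) {
            if ((action.effects & unmet) == 0) continue;  // does not help
            PlanNode next = node;
            // Regress: the action provides its effects but needs its preconditions.
            next.required = (node.required & ~action.effects) | action.preconditions;
            next.g = node.g + action.cost;
            next.f = next.g + Unsatisfied(next.required, current);
            next.steps.push_back(action.name);
            open.push(next);
        }
    }
    return {};
}
```

As a usage example, give the planner four actions: Attack (needs kWeaponLoaded, gives kEnemyDead, cost 1), Reload (needs kHasWeapon, gives kWeaponLoaded, cost 1), PickUpWeapon (needs nothing, gives kHasWeapon, cost 2) and MeleeAttack (needs nothing, gives kEnemyDead, cost 5). For an unarmed agent with the goal kEnemyDead, it returns PickUpWeapon, Reload, Attack, because the total cost of 4 beats the single melee attack at 5. Bump the cost of PickUpWeapon and the plan changes, which is exactly the "fixed value per action that can be changed if necessary" idea from the list above.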

And that's it. The theory behind GOAP is really simple, so why isn't it taking off in the same way that behaviour trees or state machines did? Well, despite providing a more cinematic and believable experience, GOAP has one small problem. To paraphrase its original programmer Jeff Orkin, who built it for Monolith Productions' F.E.A.R. (the first game to use the system): it lacks a level of control that designers would like to have.

Within a standard state machine or behaviour tree, it's fairly easy to control the flow of actions for your NPC. For example, let's say you want your NPC to go to the shops, but it's currently raining. Within a traditional FSM or behaviour tree you can control the flow of logic, either through a pre-defined schedule or through how you structure the tree, and so in this situation you'd want the logic to go: OPEN DOOR -> CHECK WEATHER -> PUT ON COAT -> GET ON BUS -> ARRIVE AT SHOPS. However, depending on your action weighting and whether your shop sells coats, GOAP may decide to do this instead: OPEN DOOR -> CHECK WEATHER -> GET ON BUS -> ARRIVE AT SHOPS -> BUY COAT -> PUT ON COAT. This just wouldn't make sense, and so to avoid it you'd have to start putting limitations on the planner. And at the end of the day you still have to code in all the behaviours/actions, so why even bother creating the planner if you're just going to limit what it can do?

Forza Drivatars and machine learning

Have you ever been playing Forza Horizon and seen your friends driving around with you, only they aren't online and you're playing in single player? What you're seeing is a Forza Drivatar and these are something rather special.

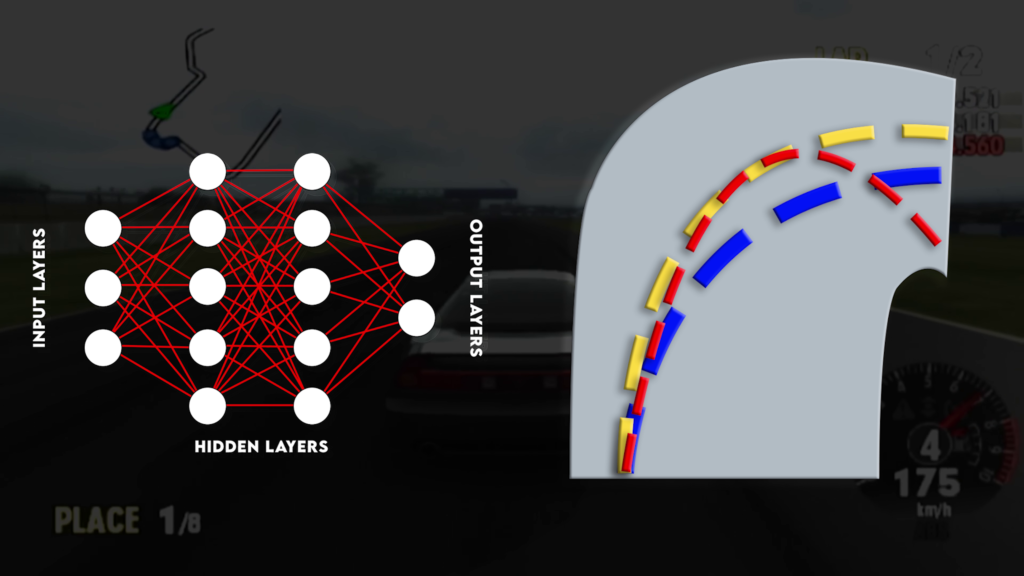

Drivatars were developed by Microsoft Research's Cambridge lab for the release of Forza Motorsport on the original Xbox, and the system took advantage of what was then a unique feature of all Xbox consoles: the 8GB hard drive. This drive allowed for large datasets to be made, datasets that stored information on not only your racing lines but also the characteristics of how you drove, with that car, on that track, under those specific conditions. The idea was that with enough data it would be possible to develop an AI opponent that would drive around a track as you would, but it wouldn't even need to be the same track or the same car. So how do they do this? Well, they use artificial neural networks.

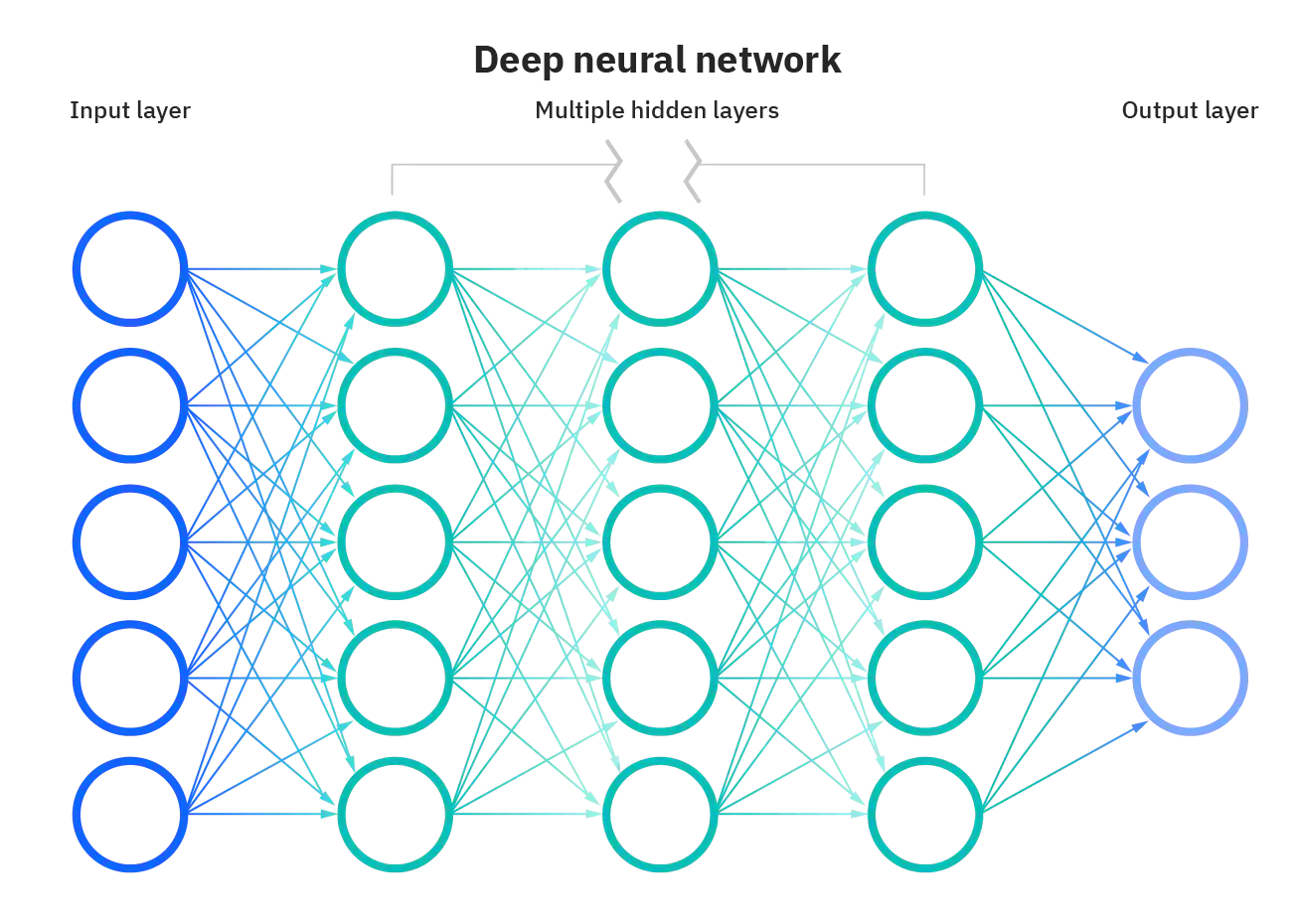

In a traditional neural network, data goes in at the input layer and gets spat out the other side after passing through multiple neurons. Each of those neurons has its own connection weights and biases, and generally, for similar inputs, the model will output similar results. This would be fine if every corner of the same classification were exactly the same, pixel for pixel. But they're not, and this is where Bayesian neural networks come into play.

Unlike traditional neural networks, within Bayesian networks, each connection is assigned a probability distribution and the network runs multiple times, with each output having a confidence value that relates to how many times that particular pattern has appeared in the data set. So to put that into the context of Forza, the network produces a load of different lines and chooses the one that best fits how you would drive.
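
To give a rough feel for the "weights as distributions, run it multiple times" idea, here is a toy sketch with a single neuron. It is in no way Forza's model: the normal distributions, the tanh activation and every name are assumptions made for the example. Each run samples a fresh set of weights, and the spread of the outputs acts as a crude measure of how confident the network is.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// A weight is described by a distribution rather than a single number.
struct WeightDist {
    double mean;
    double stddev;
};

struct Prediction {
    double mean;    // average output over all sampled runs
    double spread;  // standard deviation of the outputs (higher = less sure)
};

// Assumes inputs has at least as many entries as weights, and samples > 0.
Prediction Predict(const std::vector<WeightDist>& weights,
                   const std::vector<double>& inputs,
                   int samples, unsigned seed) {
    std::mt19937 rng(seed);
    double sum = 0.0;
    double sum_sq = 0.0;

    for (int s = 0; s < samples; ++s) {
        double activation = 0.0;
        for (std::size_t i = 0; i < weights.size(); ++i) {
            double w = weights[i].mean;
            if (weights[i].stddev > 0.0) {  // the distribution needs stddev > 0
                std::normal_distribution<double> dist(weights[i].mean, weights[i].stddev);
                w = dist(rng);  // sample a concrete weight for this run
            }
            activation += w * inputs[i];
        }
        const double output = std::tanh(activation);
        sum += output;
        sum_sq += output * output;
    }

    const double mean = sum / samples;
    const double variance = std::max(0.0, sum_sq / samples - mean * mean);
    return {mean, std::sqrt(variance)};
}
```

With every stddev set to zero this collapses back into an ordinary neuron that always gives the same answer; the wider the weight distributions, the more the sampled outputs disagree with one another.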

Unfortunately, whilst this approach worked, it had difficulty adapting to new situations. So with the launch of Forza Motorsport 5 and the transition of the Drivatar training to be on the Xbox Live servers it was clear the system needed updating.

In an interview with Ars Technica, creative lead Dan Greenawalt said, "That's what AI is. You feed in data and it feeds out what it wants to do, it doesn't tell you why, it's like a 2-year-old." When they transitioned to the Xbox Live servers, they got a huge influx of data, which was fed into a model that had only ever been trained on the data from a single console, resulting in what can only be described as interesting results. Greenawalt went on to say, "things got fast in ways we didn't expect, they got slow in ways we didn't expect and weird inaccuracies showed up in our system that we didn't really expect."

Because the data tracks how you drive, it also picks up all your negative driving techniques, so if you were an aggressive driver and collided a lot it would pick this up. Likewise, it would also pick up if you abused the track's limits. Obviously, this would and did cause frustration with players, and so they ended up having to create a hybrid system that takes aspects of traditional game AI and layers them over the top of the black box that is the neural network.

The other issue they had wasn't so much an issue with the AI as an issue with game design and how the AI fits in, because all games want you to win. ("All games? But what about Dark Souls?" I hear you ask; yes, even Dark Souls wants you to win, it just goes about it in a funny way.) And to keep that principle, to keep that power fantasy alive, most racing games implement a technique known as rubber banding, where the game limits the performance of the AI if you are falling behind and increases it if you get too far ahead.

By doing this, however, the Drivatars aren't going to drive like your friend does. To get around this, they modify the car within the limits of the physics engine so that it's just the input values for the neural network that have changed, meaning the Drivatar still drives how your friend would drive in that set of conditions.

What began as a novel concept for driver AI in a video game has turned into one of the most impressive implementations of machine learning (and now deep learning with the advent of Forza Motorsport 5) that's ever been seen in video games. It's a system that is constantly being updated and improved on with every lap that gets uploaded to the servers.

So, outside of Forza, which uses actual AI techniques, video game AI is all just smoke and mirrors, working as hard as it can to pull one over on you and keep you completely immersed in the world that the developers have created. And with machine learning making its way into video games with the likes of Forza Drivatars and, more recently, Gran Turismo's Sophy, the future of AI in video games is looking very promising. Who knows, maybe in 10 years' time we'll be playing the most intense game of hide 'n seek with a Xenomorph.

For further reading on the topics I've covered here, I'd highly recommend checking out AI and Games. A lot of what I know about video game AI has come from this channel, and you may learn new things too!